Google Sheets is one of Google’s most popular products. It is part of the popular G Suite suite, which includes other tools such as Google Docs, Google Forms, Google Slides, and Google Drive. All these tools are stored in the cloud and can be accessed from anywhere.

Most of these services are similar to Microsoft Office tools such as Microsoft Word and Excel. But what makes them popular is that they are free and do not change, unlike Microsoft Office. Plus, they are not tied to a computer, as they are saved in the cloud.

Google offers a great API service for Google Sheets. With version 4, which was released in 2016, we can now perform all tasks in one place. Previously, we were limited to reading and writing in cells, but now we can filter views, create tables, and perform all sorts of other tasks.

In this article, we will explore the limits of the Google Sheets API. When developing a production application that uses Google Sheets as a database to store data, we often reach this frequency limit. So let’s see what this limit is and how to avoid it.

Is Google Sheets API Free?

The first question that comes to mind for anyone deciding to use the Google Sheets API is whether it is free.

Yes, it is completely free, but it has a limit. This limit has been set to protect Google APIs from hackers. These hackers hit the API endpoints with a large number of requests to bring them down.

According to the documentation, there is a limit per minute per project.

What is the Google Sheets API Limit?

Now, the next question is about the limit of the Google Sheets API. The frequency limit of the Google Sheets API is shown below. The official documents can be found here. According to the documentation, there is a limit per minute per project.

We can see that with per day per project, we get unlimited read and write requests.

Exceeding a Frequency Limit

In larger production applications, you will make an API call from the same service account with the same user. In these cases, you often exceed the frequency limit of 60. If the quota limit is exceeded, you will get the error code 429: Too Many Requests.

In this scenario, you need to use an exponential backoff algorithm, as suggested in the official documentation here. In the exponential backoff algorithm, you need to write code that captures exceptions. After capturing the exception, you need to retry.

Now, if the API request fails again, the program should retry after increasing the request time. If it fails another time, we increase the API request time again and retry. We need to continue increasing the time and retrying until we succeed.

Implementing the Exponential Backoff Algorithm

Here is the implementation of the exponential backoff algorithm in Node.js. You can find all other implementations in all major languages here.

Now, you need to first provide your Google Cloud storage bucket and its ID. Then, import the main storage function from Google.

Next, create a new storage instance and pass it an object. This object contains some important parameters required by the storage class. These parameters are:

autoRetry– This must be set to true for the retry to happen.retryDelayMultiplier– This is the multiplier that increases the delay time after each failed request.totalTimeout– This represents the time between an initial request and its expiration.maxRetryDelay– This is the maximum duration allowed. Once reached, the retry delay multiplier will not be used.maxRetries– This is the maximum number of retries allowed.idempotencyStrategy– This indicates what type of operation we have. If set to RetryAlways, it will follow all the above options.

const bucketName = 'your-unique-bucket-name';

const fileName = 'your-file-name';

const { Storage } = require('@google-cloud/storage');

const storage = new Storage({

retryOptions: {

autoRetry: true,

retryDelayMultiplier: 3,

totalTimeout: 500,

maxRetryDelay: 60,

maxRetries: 5,

idempotencyStrategy: IdempotencyStrategy.RetryAlways,

},

});

console.log('Functions are customized to be retried based on the following parameters:');

console.log(`Auto Retry: ${storage.retryOptions.autoRetry}`);

console.log(`Retry Delay Multiplier: ${storage.retryOptions.retryDelayMultiplier}`);

console.log(`Total Timeout: ${storage.retryOptions.totalTimeout}`);

console.log(`Max Retry Delay: ${storage.retryOptions.maxRetryDelay}`);

console.log(`Max Retries: ${storage.retryOptions.maxRetries}`);

console.log(`Idempotency Strategy: ${storage.retryOptions.idempotencyStrategy}`);

async function deleteFileWithCustomizedRetrySetting() {

await storage.bucket(bucketName).file(fileName).delete();

console.log(`File ${fileName} deleted with a customized retry strategy.`);

}

deleteFileWithCustomizedRetrySetting();Checking Quota Limits

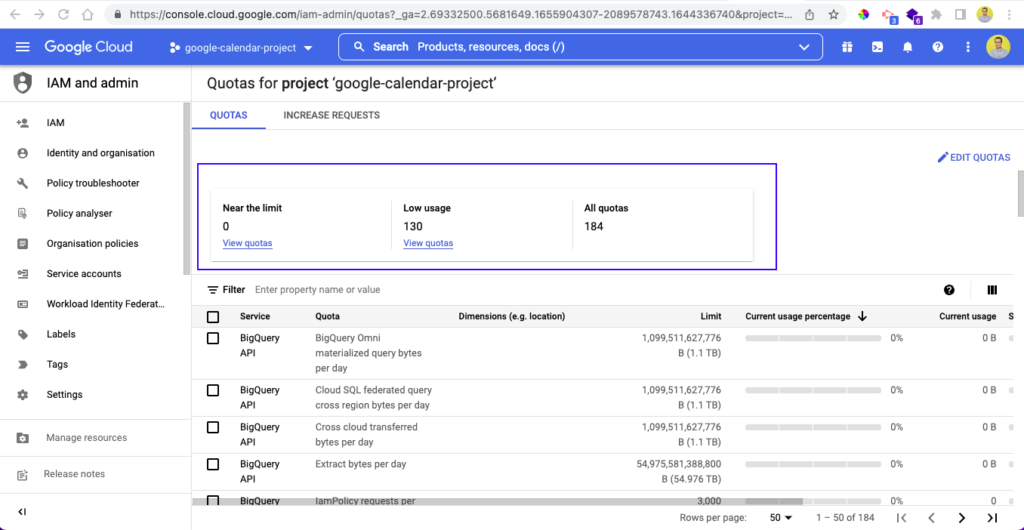

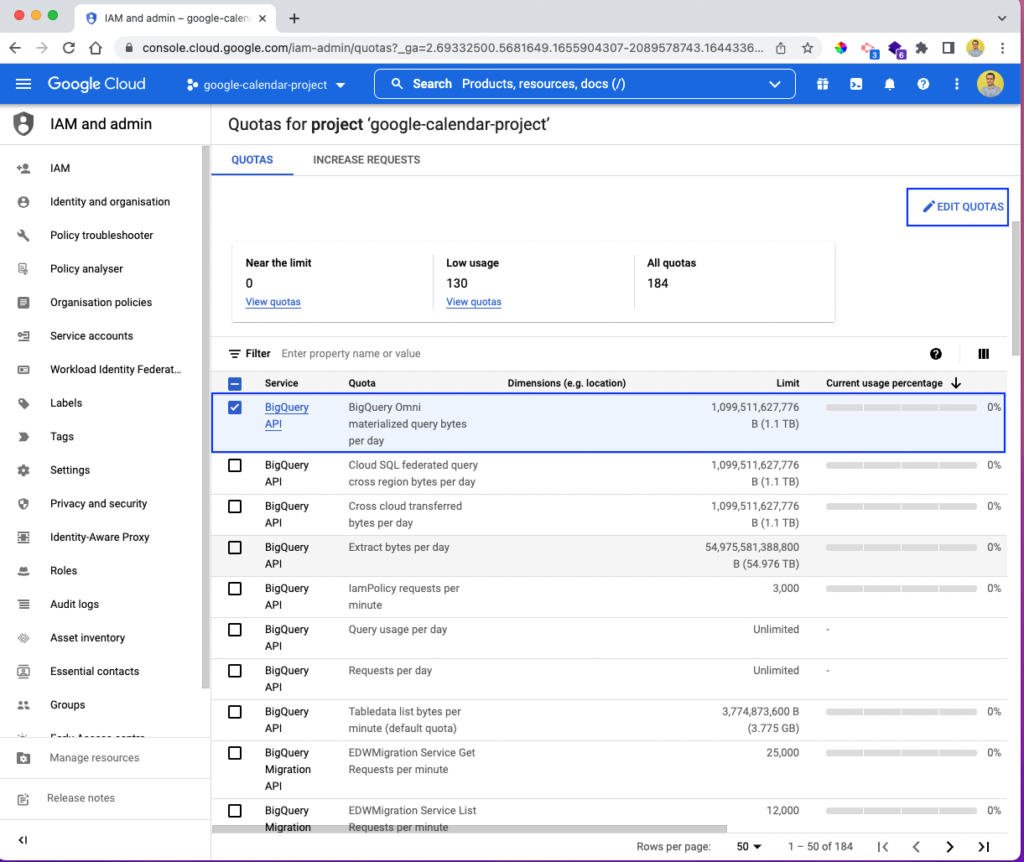

You can check your application’s quotas by logging in to the Google Cloud Console via this link. This will show the quota of the last running application.

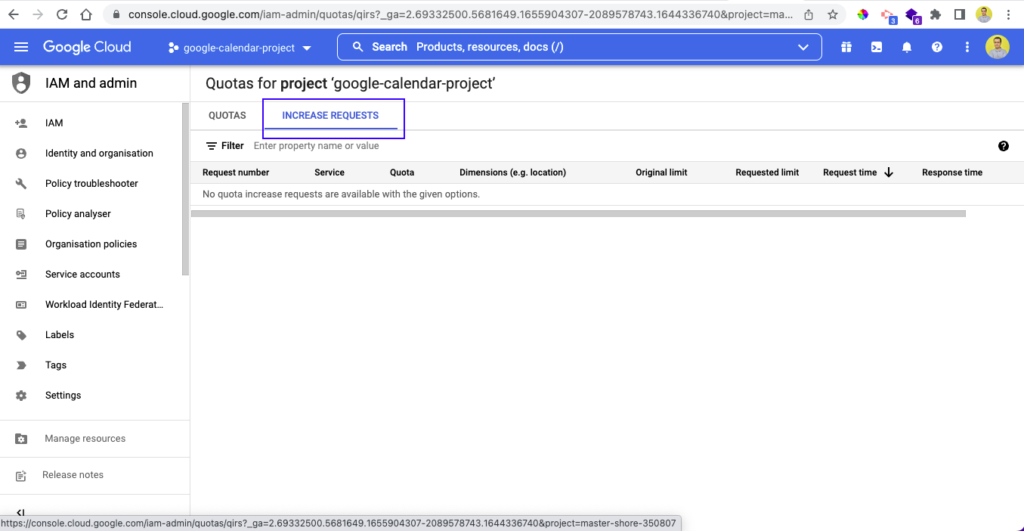

You can also see the REQUESTS FOR INCREASE tab next to the QUOTAS tab. By clicking on it, you will find all the increase requests that have been made.

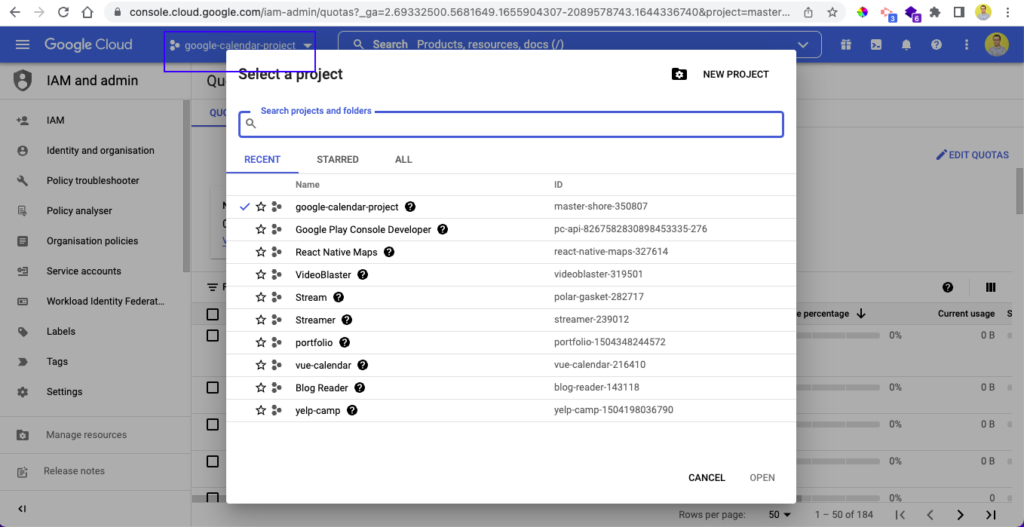

To check the quota limit of a different project, simply click the dropdown arrow of the project. Then, a popup window will open where you can click on other projects to see their quota.

How to Increase Google Sheets API Limit?

Now, if your limit is reached, what is the procedure to increase the Google Sheets API limits?

We simply have to request it if we want Google to increase the quota limit. And according to Google, even if you request a quota increase, it does not guarantee an increase.

In fact, significant quota increases can take a long time to be approved.

When we request a quota increase, it is evaluated by Google’s artificial intelligence system. It checks different factors such as how long we have been using Google Cloud and other elements.

In some cases, the requests are processed by humans, who also follow strict guidelines.

If the request is approved, the user then has to prepay for the quota increase. Quota increase requests are typically resolved within two to three business days.

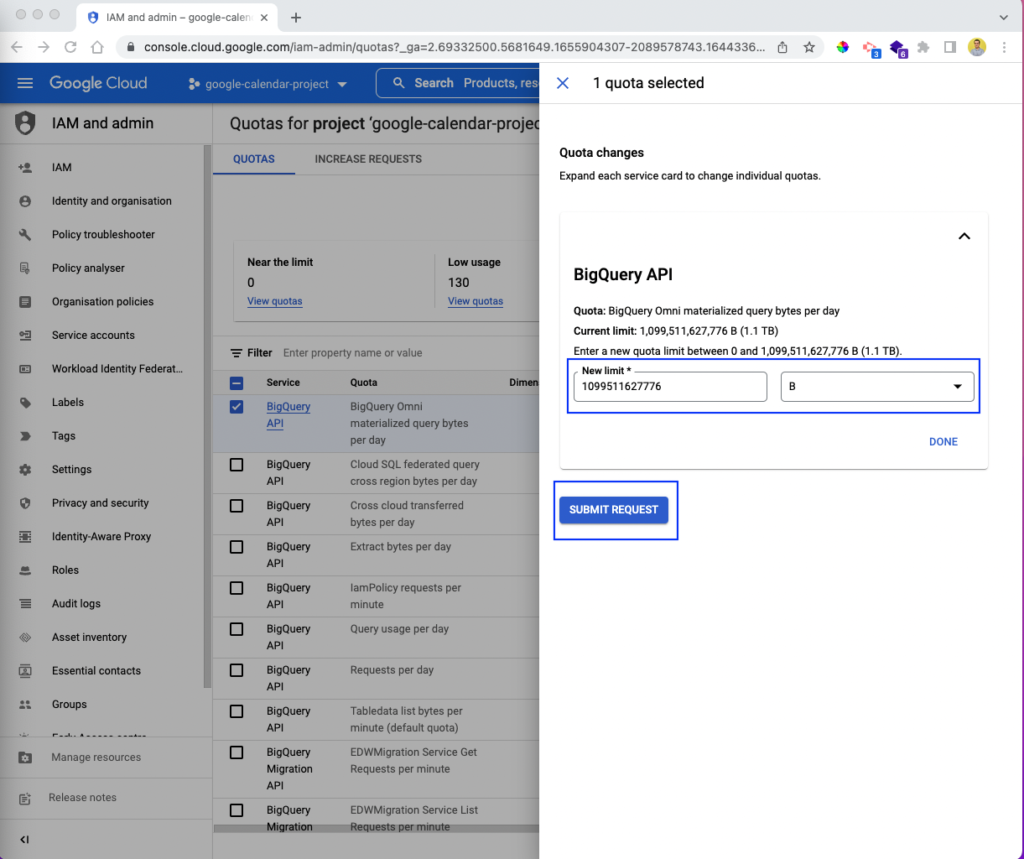

We need to make the quota increase request via the Google Cloud Console. Log in following the steps mentioned above. Then, check the box next to the request indicating that you want to increase the quota. Next, click on EDIT QUOTAS at the top right of the page.

This will open a popup window where you can update the limit to set a new limit. You will also see the metrics bytes, kilobytes, megabytes, gigabytes, terabytes, and petabytes. Give the new updated values and click the SUBMIT REQUEST button.

Conclusion

In this article, we have uncovered the issues of frequency limitation of the Google Sheets API. We first briefly learned about Google Sheets and the freely available API with it.

Thanks to the Google Sheets API, we can perform all the usual tasks of reading and writing in a cell. In addition to that, we can also perform advanced tasks such as view filtering and table creation.

We also learned about the different limits of the Google Sheets API. Then, we learned what happens when we exceed a quota.

Furthermore, we saw in detail the implementation of the exponential backoff algorithm. We also learned how to check quota limits in the Google Cloud Console. Finally, we learned how to increase the quota via the Google Cloud Console. Here, we also learned how Google uses a combination of artificial intelligence and human operators to approve our quota increase requests.

Before you go, don’t forget to subscribe to our email updates or follow us on Twitter at @bolamarketing.com to stay informed about our latest content! Also, take a look at Crawlan.com, a site full of resources to help you improve your online presence.

Let us know what you think. Bye for now! 👋

This article was written by Nabendu Biswas. Nabendu has been working in the software industry for 15 years, starting as a C++ developer and then moving into databases. Over the past six years, he has worked as a web developer in the JavaScript ecosystem, building web applications in ReactJS, NodeJS, and GraphQL. He enjoys blogging about what he learns and works on.